Description:

This research examines the mapping of control parameters to sound synthesis/processing parameters from several perspectives. One approach treats a mapping from a functional-analytic perspective: as a continuous function f from a control space R^n into a sound synthesis space R^m. In this context, the mapping becomes an interpolation problem and takes on a geometric interpretation. We have assessed existing and potential mapping strategies within this mathematical framework to determine which is appropriate for a given musical purpose. For example, we might choose:

- A regularized spline-based mapping when perceptually smooth control over a few parameters is desired and relative rather than absolute position is important.

- A simplex-based barycentric interpolation when equal (up to a scaling factor) and abrupt variation among many parameters is desired and absolute position is important.
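As an illustration of the second option, the following is a minimal sketch of barycentric interpolation over a single 2D simplex (a triangle). It is not taken from the Max/MSP toolbox; the function names `barycentric_weights` and `barycentric_map`, the triangle vertices, and the three-parameter presets are all hypothetical, chosen only to show how a control point in R^2 can be mapped to a parameter vector in R^m.

```python
def barycentric_weights(p, a, b, c):
    """Barycentric weights of 2D point p with respect to triangle (a, b, c)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("degenerate triangle: vertices are collinear")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb


def barycentric_map(p, vertices, presets):
    """Map control point p to synthesis parameters.

    vertices: three 2D control positions (hypothetical layout).
    presets:  three parameter vectors of equal length m, one per vertex.
    """
    weights = barycentric_weights(p, *vertices)
    m = len(presets[0])
    return [sum(w * preset[k] for w, preset in zip(weights, presets))
            for k in range(m)]


# Hypothetical example: three presets of (frequency, gain, cutoff).
vertices = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
presets = [[220.0, 0.1, 500.0], [440.0, 0.5, 1000.0], [880.0, 0.9, 2000.0]]
params = barycentric_map((0.25, 0.25), vertices, presets)
```

At a vertex the output equals that vertex's preset exactly, which is one sense in which absolute position matters in this scheme. A full simplex-based mapping would also need to triangulate the control space and locate the simplex containing the control point; that step is omitted here.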

We differentiate between mappings that directly lie in a parameter space (embedding mapping) vs. those that combine, linearly or non-linearly, many parameters in a reduction of data (mixture mapping). Further, we are developing a collection of interpolation functions in Max/MSP for these tasks.

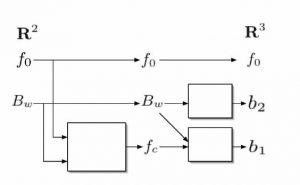

Multi-layer, flow diagram view of 2D to 3D mapping controlling source-filter model

To match a mapping function appropriately with the perceptual nature of a musical context, we conduct user studies that test preferences among various geometric control surfaces for different sound synthesis parameter spaces. Both quantitative and qualitative analyses are used to explore the extent to which the geometric nature of the mapping function itself plays a role in the perceptual “feel” of the musical interaction. Additionally, we examine the use of the mapping itself as visual feedback vs. the use of another representation of the musical space.

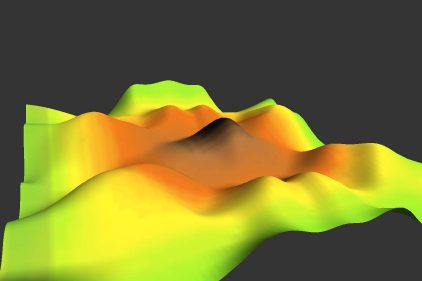

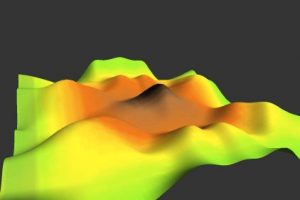

2D control surface embedded in 3D synthesis space

We take another approach to control of sound synthesis through research conducted with Philippe Depalle in the Sound Processing and Control Laboratory. Rather than treating control as a geometric interpolation problem, we consider the control and the sound synthesis algorithm together as a single dynamical system. Specifically, we use state-space representations and adaptive filtering techniques to estimate and control the internal dynamics of the sound based on the current and past states. From a phenomenological perspective, this allows us to arrive at a mapping constraint based on observable data. As more becomes known about the dynamics of the control/synthesis context, this knowledge can be incorporated into the system equations.
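To make the state-space idea concrete, here is a minimal sketch of one standard estimator of this kind, a scalar linear Kalman filter. It is offered only as a generic example of estimating a hidden state from current and past observations, not as the method used in this research. The model (x_k = a·x_{k-1} + process noise, y_k = x_k + measurement noise), the function name `kalman_track`, and all parameter values are assumptions made for illustration.

```python
def kalman_track(observations, a=1.0, q=1e-3, r=1e-1, x0=0.0, p0=1.0):
    """Estimate a hidden scalar state from noisy observations.

    Assumed model (hypothetical, for illustration only):
        x_k = a * x_{k-1} + w_k,  w_k with variance q  (state dynamics)
        y_k = x_k + v_k,          v_k with variance r  (observation)
    """
    x, p = x0, p0          # state estimate and its error variance
    estimates = []
    for y in observations:
        # Predict: propagate the estimate through the assumed dynamics.
        x = a * x
        p = a * p * a + q
        # Update: blend prediction and observation using the Kalman gain.
        k = p / (p + r)
        x = x + k * (y - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


# Hypothetical usage: track a control value that jumps from 0.0 to 1.0.
observed = [0.0] * 20 + [1.0] * 200
smoothed = kalman_track(observed)
```

In such a formulation, additional knowledge about the control/synthesis dynamics would enter through the system equations, here the coefficient `a` and the noise variances `q` and `r`.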

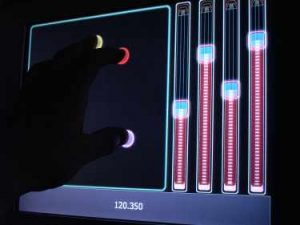

We compare mappings across various controllers, including the Lemur touch-sensitive device

The first, geometry-based approach imposes an explicit constraint on an abstract signal model of parameters, and the usefulness of the mapping (as well as its visual representation) is assessed through analysis and user studies. The second, state-space-based approach embeds a physical description of the control/response dynamic within the synthesis algorithm itself. The goal is to use these complementary approaches to arrive at a perceptually meaningful control of sound in which the interaction feels natural and intuitive.

IDMIL Participants:

External Participants:

Prof. Philippe Depalle (SPCL)

Research Areas:

Funding:

- Richard H. Tomlinson Doctoral Fellowship

Publications:

- Van Nort, D., Wanderley, M. M. (2006). The LoM Mapping Toolbox for Max/MSP/Jitter. In Proceedings of the 2006 International Computer Music Conference (ICMC2006). New Orleans, USA.

- Van Nort, D., Wanderley, M. M. (2006). Exploring The Effect of Mapping Trajectories on Musical Performance. In Proc. of the 2006 Sound and Music Computing Conference (SMC2006). Marseille, France.

- Van Nort, D., Depalle, P. (2006). A Stochastic State-Space Phase Vocoder for Synthesis of Roughness. In Proc. of the Int. Conf. on Digital Audio Effects (DAFx2006) (pp. 177–180). Montreal, Quebec, Canada.

- Van Nort, D., Wanderley, M. M. (2007). Control Strategies for Navigation of Complex Sonic Spaces. In Proc. of the 2007 International Conference on New Interfaces for Musical Expression (NIME 2007) (pp. 379–383). New York City, NY, USA.