Description:

This project explores ways to integrate force-feedback haptics into audio and multimedia systems. It provides a physical simulation of a virtual environment that can be manipulated with a force-feedback device. The simulation is initialized via Open Sound Control (OSC) from programs such as PureData, Max/MSP, or ChucK. Each object can then send messages back reporting its properties, or events such as collisions with other objects, and this data can be used to control sound synthesis.


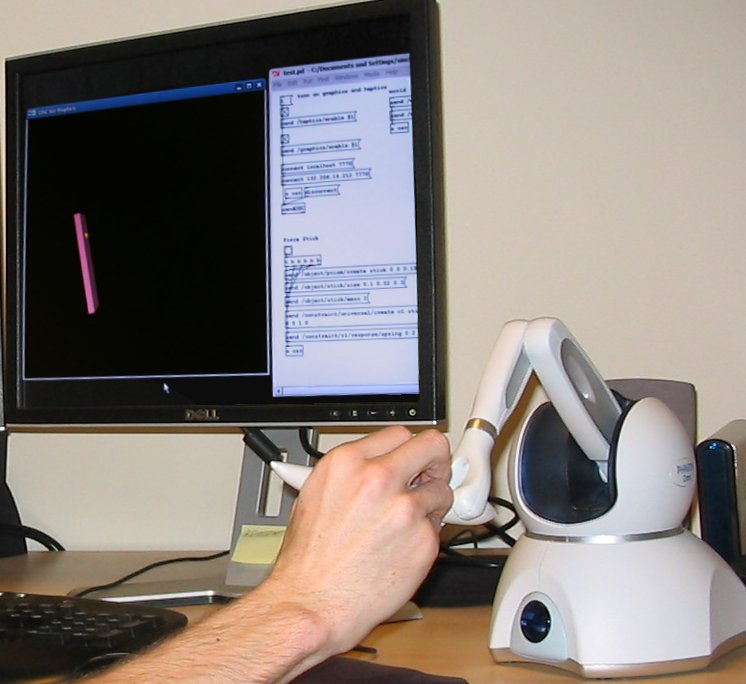

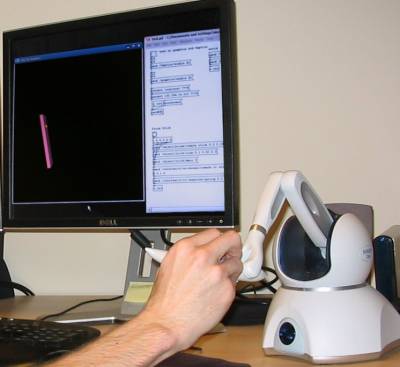

Force feedback in conjunction with PureData on a SensAble Phantom Omni device.

Funding:

- NSERC

Publications:

- Sinclair, S., Wanderley, M. M. (2009). A run-time programmable simulator to enable multi-modal interaction with rigid-body systems. Interacting with Computers (pp. 54–63). Elsevier.

- Sinclair, S., Wanderley, M. M. (2007). Extending DIMPLE: a rigid body haptic simulator for interactive control of sound. In Proceedings of the International Conference on Enactive Interfaces (ENACTIVE 2007) (pp. 263–266). Grenoble, France.

- Sinclair, S., Wanderley, M. M. (2007). Using PureData to control a haptically-enabled virtual environment. In Proceedings of the PureData Convention '07. Montreal, QC, Canada.

- Sinclair, S., Wanderley, M. M. (2007). Defining a control standard for easily integrating haptic virtual environments with existing audio/visual systems. In Proceedings of the 2007 Conference on New Interfaces for Musical Expression (NIME 2007) (pp. 209–212). New York City, NY, USA.