Authors:

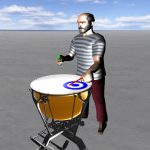

Alexandre Bouënard, Sylvie Gibet, Marcelo M. Wanderley

Abstract:

Virtual characters playing virtual musical instruments must interact in real time with the sounding environment. Dynamic simulation is a promising approach for finely representing and modulating this interaction. Moreover, captured human motion can provide a database covering a wide variety of gestures with various levels of expressivity. In this paper, we propose a physics-based environment in which a virtual percussionist is dynamically controlled and interacts with a physics-based sound synthesis algorithm. We show how an asynchronous architecture, comprising motion and sound simulation as well as visual and sound outputs, can take advantage of the parameterization of both gesture and sound, which shapes the resulting virtual instrumental performance.
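The abstract does not detail the percussionist controller or the synthesis model, so the following is only a toy sketch of the general idea of an asynchronous motion/sound coupling, not the authors' implementation. It assumes a bouncing point-mass mallet tip in place of the dynamically controlled virtual percussionist, and simple modal synthesis (exponentially decaying sinusoids) in place of the paper's physics-based sound algorithm. The two simulations run in separate threads at different rates (a slow motion rate and a 44.1 kHz audio rate) and communicate only through impact events. All names (`simulate_mallet`, `synthesize`, `Impact`, `MODES`) and all numeric parameters are hypothetical.

```python
import math
import queue
import threading
from dataclasses import dataclass
from typing import List, Optional

SAMPLE_RATE = 44_100  # audio rate in Hz (assumed)
MOTION_RATE = 500     # motion-simulation rate in Hz (assumed, much slower than audio)

# Hypothetical drum modes: (frequency in Hz, decay time in s, relative gain).
MODES = [(180.0, 0.30, 1.0), (297.0, 0.20, 0.5), (412.0, 0.12, 0.3)]


@dataclass
class Impact:
    time: float      # simulation time of contact, in seconds
    velocity: float  # downward speed of the mallet at contact, in m/s


def simulate_mallet(events: "queue.Queue[Optional[Impact]]", drop_height: float = 0.3,
                    restitution: float = 0.5, duration: float = 1.5) -> None:
    """Toy motion thread: a point-mass mallet tip falls under gravity and
    bounces on a drumhead at y = 0, posting one Impact per contact."""
    g, dt = 9.81, 1.0 / MOTION_RATE
    y, v, t = drop_height, 0.0, 0.0
    while t < duration:
        v -= g * dt  # semi-implicit Euler: update velocity first...
        y += v * dt  # ...then position, using the new velocity
        t += dt
        if y <= 0.0 and v < 0.0:
            events.put(Impact(time=t, velocity=-v))
            y, v = 0.0, -restitution * v  # rebound with energy loss
            if v < 0.05:                  # stop once the rebound is negligible
                break
    events.put(None)  # sentinel: motion simulation finished


def synthesize(events: "queue.Queue[Optional[Impact]]", out: List[float]) -> None:
    """Toy sound thread: each impact excites decaying sinusoids scaled by the
    impact velocity and placed at the impact's simulation timestamp, so the
    output does not depend on when the event actually arrives."""
    while (impact := events.get()) is not None:
        start = int(impact.time * SAMPLE_RATE)
        for freq, tau, gain in MODES:
            length = min(int(5 * tau * SAMPLE_RATE), len(out) - start)
            for n in range(max(length, 0)):
                ts = n / SAMPLE_RATE
                out[start + n] += (gain * impact.velocity * math.exp(-ts / tau)
                                   * math.sin(2 * math.pi * freq * ts))


def run(duration: float = 1.5) -> List[float]:
    """Start both simulations concurrently and return the rendered audio."""
    events: "queue.Queue[Optional[Impact]]" = queue.Queue()
    audio = [0.0] * int(duration * SAMPLE_RATE)
    motion = threading.Thread(target=simulate_mallet, args=(events,),
                              kwargs={"duration": duration})
    sound = threading.Thread(target=synthesize, args=(events, audio))
    motion.start()
    sound.start()
    motion.join()
    sound.join()
    return audio
```

Calling `run()` returns a list of audio samples in which each bounce produces a progressively quieter strike. Timestamping events with simulation time, rather than wall-clock arrival, is one simple way to let the two loops run asynchronously while keeping the sound aligned with the motion.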

Publication Details:

Type: Research Report

Date: 09/01/2009